This post is Part 4 of my series of posts on Index Maintenance for a DBA. So here goes.

Plenty has been said about missing indexes in SQL Server. Before you read any further, it is important to understand that the “missing indexes” your colleagues and friends talk about are really missing index “recommendations”. The keyword is recommendations. Take the information in this post as a whole, and don’t go crazy creating every missing index recommended when you run the query given below. Refer to my earlier posts in the indexing series (duplicate indexes, unused indexes) to see why it is a bad idea to go “index-crazy”. You need to carefully weigh the benefit of having an index against the cost of maintaining it.

Some Background

SQL Server exposes index recommendations via DMVs (sys.dm_db_missing_index_details, sys.dm_db_missing_index_groups and sys.dm_db_missing_index_group_stats). These dynamic management views contain information collected by the SQL Server query optimizer as your workloads and queries execute on the database engine. Since this is “dynamic” information that is cleared when the SQL Server service restarts, it only reflects the workload seen since the last restart. So checking it periodically needs to get into your blood as a DBA; it is NOT a one-off activity.
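To judge how much workload history sits behind the recommendations, it helps to know when the instance last started. The query below is a minimal sketch of that check; it assumes your login has the VIEW SERVER STATE permission, and the HoursSinceRestart alias is just an illustrative name.

```sql
-- When did the SQL Server instance last start?
-- The missing index DMVs only hold data gathered since this time.
SELECT sqlserver_start_time,
       DATEDIFF(HOUR, sqlserver_start_time, GETDATE()) AS HoursSinceRestart
FROM sys.dm_os_sys_info;
```

If the instance restarted only a few hours ago, the recommendations are based on very little workload and should be treated with extra caution.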

Things to Consider & Understand before implementing Missing Index Recommendations

Your boss asks you if this index thingy will improve the performance of the database. The favourite DBA response: “It Depends” 😀

Well, it really does! I’ll explain why. As with any other recommendation, you need to take additional information into account along with this data before coming to a conclusion. Consider for example the following:

- Has your DB workload changed since the time you collected the missing index recommendations? (i.e. Has there been an application release or DB schema upgrade since)

- Are there similar existing indexes on the table where it’s recommending you create another index? (Maybe you just need to add a few additional columns to the existing index as included columns to “cover” it…)

- Is the query batch or workload run frequently enough that creating this index would have a high impact? (The user_seeks and user_scans columns show how often the index could have been used, and last_user_seek and last_user_scan show when a user query last performed a seek/scan that the index could have served)

- Do you have enough space in the DB/disk to afford yet another index? Especially important, if it’s a large table. (DBA 101 question, I know!)

- If you create this index and it’s rarely used, you are increasing the maintenance overhead and also slowing down WRITE operations on the table.

IT DEPENDS, really it does!
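For the question about similar existing indexes, one way to check is to list the key and included columns of every index already on the table. This is a rough sketch only; dbo.YourTable is a hypothetical placeholder for the table named in the recommendation.

```sql
-- Hypothetical table name; replace with the table from the recommendation.
DECLARE @TableName sysname = N'dbo.YourTable';

-- One row per index column: key columns first (in key order), then included columns.
SELECT i.name                AS IndexName,
       i.type_desc           AS IndexType,
       c.name                AS ColumnName,
       ic.key_ordinal        AS KeyOrdinal,
       ic.is_included_column AS IsIncludedColumn
FROM sys.indexes AS i
INNER JOIN sys.index_columns AS ic
    ON ic.[object_id] = i.[object_id]
   AND ic.index_id = i.index_id
INNER JOIN sys.columns AS c
    ON c.[object_id] = ic.[object_id]
   AND c.column_id = ic.column_id
WHERE i.[object_id] = OBJECT_ID(@TableName)
ORDER BY i.index_id, ic.is_included_column, ic.key_ordinal;
```

If an existing index already starts with the same key columns as the recommendation, adding the missing columns to it as included columns may be cheaper than creating a brand new index.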

Missing Index – Improvement Measure Calculation Logic

To help answer some of these questions, I calculate an arbitrary number, called the improvement measure, to gauge the “usefulness” of a new index. The higher this number, the more useful the index is likely to be. The improvement measure is calculated as average cost × average impact × (seeks + scans), using these 4 factors:

- Average cost of the user queries that could be reduced by the new index (avg_total_user_cost). This is the current cost of the query.

- Average impact on the query if this index were created (avg_user_impact), i.e. the query cost would on average drop by this percentage value.

- Number of seeks by user queries that this index could have been used for (user_seeks).

- Number of scans by user queries that this index could have been used for (user_scans).
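To make the arithmetic concrete, here is a small worked example of the improvement measure. The four values are made up purely for illustration and do not come from any real system; the variable names simply mirror the DMV column names.

```sql
-- Made-up sample values, for illustration only.
DECLARE @avg_total_user_cost float  = 12.5;  -- current average query cost
DECLARE @avg_user_impact     float  = 80.0;  -- expected cost reduction, in percent
DECLARE @user_seeks          bigint = 400;
DECLARE @user_scans          bigint = 100;

-- 12.5 * 80.0 * (400 + 100) = 500000.0
SELECT CONVERT(decimal(28,1),
               @avg_total_user_cost * @avg_user_impact * (@user_seeks + @user_scans))
       AS Improvement_Measure;
```

The number has no unit of its own. It is only meaningful when compared against the improvement measure of other recommendations on the same server.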

Now that you understand what missing indexes are, the factors to consider and the logic behind the recommendations, go ahead and run the query below on your databases. The output gives you the “CREATE INDEX” T-SQL statement that you can use to create the index, if you see fit after considering all the factors mentioned so far. This saves you the time of writing the T-SQL yourself. The CREATE INDEX statement lists the index key columns in order (equality columns first, then inequality columns) and suggests included columns where applicable.

Script – Find Missing Indexes Recommendations in SQL Server

    USE [msdb] /* Replace with your database name */
    GO

    SELECT TOP 50
        GETDATE() AS [RunTime],
        DB_NAME(mid.database_id) AS [DBNAME],
        OBJECT_NAME(mid.[object_id]) AS [ObjectName],
        mid.[object_id] AS [ObjectID],
        CONVERT (decimal (28,1), migs.avg_total_user_cost * migs.avg_user_impact * (migs.user_seeks + migs.user_scans)) AS [Improvement_Measure],
        'CREATE INDEX missing_index_' + CONVERT (varchar, mig.index_group_handle) + '_' + CONVERT (varchar, mid.index_handle)
            + ' ON ' + mid.statement
            + ' (' + ISNULL (mid.equality_columns, '')
            + CASE WHEN mid.equality_columns IS NOT NULL AND mid.inequality_columns IS NOT NULL THEN ',' ELSE '' END
            + ISNULL (mid.inequality_columns, '')
            + ')'
            + ISNULL (' INCLUDE (' + mid.included_columns + ')', '') AS [CREATE_INDEX_Statement],
        migs.user_seeks,
        migs.user_scans,
        migs.last_user_seek,
        migs.last_user_scan,
        migs.avg_total_user_cost,
        migs.avg_user_impact,
        migs.avg_system_impact,
        mig.index_group_handle,
        mid.index_handle
    FROM sys.dm_db_missing_index_groups mig
    INNER JOIN sys.dm_db_missing_index_group_stats migs
        ON migs.group_handle = mig.index_group_handle
    INNER JOIN sys.dm_db_missing_index_details mid
        ON mig.index_handle = mid.index_handle
    WHERE CONVERT (decimal (28,1), migs.avg_total_user_cost * migs.avg_user_impact * (migs.user_seeks + migs.user_scans)) > 10
        AND mid.database_id = DB_ID()
    ORDER BY [Improvement_Measure] DESC
    GO

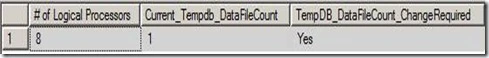

Script Result

Here is a sample output from my test system showing all missing indexes recommended on the MSDB database.

Test, Test, Test!

Did I mention that you must test these index recommendations on your Test system? If you know your application workload/query, you must test to gauge the impact of having this index.

Keep in mind that your testing must not consist of running just a single batch/query, as that will almost always yield a positive result. You must include other sample workloads along with your specific query. Why? Because indexes are structures on the table and are not specific to just your query. Other queries can use them too, and SQL Server has to keep the index up to date whenever data in the table changes. Always test index changes with a workload that is as close to real-world production as possible. You don’t want any surprises on Production now, do you? 🙂
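After running a representative workload on the test system, one way to see whether a new index is earning its keep is to compare how often it is read with how often it is updated. The sketch below assumes the index was created on a hypothetical table, dbo.YourTable. Note that these usage counters are also reset when the SQL Server service restarts.

```sql
-- Hypothetical table name; replace with the table you added the index to.
DECLARE @TableName sysname = N'dbo.YourTable';

-- Reads (seeks, scans, lookups) versus writes (updates) per index on the table.
SELECT i.name                     AS IndexName,
       ISNULL(us.user_seeks, 0)   AS UserSeeks,
       ISNULL(us.user_scans, 0)   AS UserScans,
       ISNULL(us.user_lookups, 0) AS UserLookups,
       ISNULL(us.user_updates, 0) AS UserUpdates
FROM sys.indexes AS i
LEFT JOIN sys.dm_db_index_usage_stats AS us
    ON us.[object_id] = i.[object_id]
   AND us.index_id = i.index_id
   AND us.database_id = DB_ID()
WHERE i.[object_id] = OBJECT_ID(@TableName)
ORDER BY i.index_id;
```

An index with many updates but few or no seeks and scans after a realistic test run is a sign that the recommendation is costing more than it gives back.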

Well SQL folks, this concludes my indexing series of posts for DBAs. Leave your comments down below if you find this useful and would like more material along these lines.

-Sudarshan (TheSQLDude)